Welcome to ML Labs

A comprehensive Machine Learning framework architected by Kuriko IWAI

This website hosts a comprehensive framework covering the entire machine learning lifecycle, from algorithmic deep dives to robust MLOps exercises.

Explore:

- Masterclass: Build eight AI systems to master LLM techniques.

- ML Theory: Technical blogs on LLMs, Generative AI, Deep Learning, and traditional ML.

- Learning Scenario: Specialized research into Unsupervised, Reinforcement, Meta, and Online Learning.

- MLOps: Best practices for CI/CD integration, ML lineage, and system architectures.

- LLM & NLP: Advanced LLM engineering techniques and neural architecture deep dives.

- Labs: Experiments on ML systems with walk-through tutorials and code snippets.

- Solution: ML system and ETL pipeline engineering, plus AI audit services.

What's New


AI Engineering Masterclass

Module 1

The LLM Backbone: Building a RAG-Based GPT from Scratch

Explore the core mechanisms and hands-on implementation of RAG, the tokenizer, and inference logic.

PyTorch, Tensor, HuggingFace, Transformers, Decoder-only LLM, Causal Inference, WARC, Streamlit, uv

You'll Build: Website Summarizer with LLM Configuration Playground

Production Goals:

Implement a custom BPE tokenizer, logits adjustment, and the major decoding methods.
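As a rough illustration of the BPE tokenizer named above, the sketch below learns merge rules from a toy corpus and applies them to a new word. It is a minimal character-level version, not the Masterclass implementation: the function names `train_bpe`, `merge_pair`, and `encode`, the whitespace pre-tokenization, and the first-seen tie-breaking are all assumptions made for the example.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]


def merge_pair(symbols: Tuple[str, ...], pair: Pair) -> Tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(text: str, num_merges: int) -> List[Pair]:
    """Learn up to `num_merges` merge rules (hypothetical helper)."""
    # Assumption: words are split on whitespace and start as single characters.
    words = Counter(tuple(word) for word in text.split())
    merges: List[Pair] = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs: Counter = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        # Most frequent pair wins; ties go to the pair counted first.
        best = max(pairs, key=pairs.get)
        merges.append(best)
        merged_words: Counter = Counter()
        for symbols, freq in words.items():
            merged_words[merge_pair(symbols, best)] += freq
        words = merged_words
    return merges


def encode(word: str, merges: List[Pair]) -> List[str]:
    """Tokenize one word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = train_bpe("low low low lower lowest", num_merges=2)
tokens = encode("lowly", merges)
```

On this toy corpus the two learned merges build the subword `low`, so an unseen word such as `lowly` is split into a known subword plus leftover characters. A production tokenizer would typically work on bytes and map each token to an integer ID.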

LLM Techniques to Master:

- Perform Common Crawl & Heuristic filtering.

- Build a BPE tokenizer to map text to tokens.

- Adjust logits via logit bias, temperature, and repetition penalty.

- Interactively apply stochastic/deterministic decoding methods.

- Deploy inference behind an API as a microservice.
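The logits adjustments and decoding methods listed above can be sketched in plain Python. This is an illustrative sketch rather than the module's code: the function names, the order in which the adjustments are applied (repetition penalty, then bias, then temperature), and the choice of top-k sampling as the stochastic method are assumptions. The repetition penalty follows the common convention of dividing positive logits and multiplying negative ones.

```python
import math
import random
from typing import Dict, List, Optional


def adjust_logits(
    logits: List[float],
    generated_ids: List[int],
    logit_bias: Optional[Dict[int, float]] = None,
    temperature: float = 1.0,
    repetition_penalty: float = 1.0,
) -> List[float]:
    """Return a new logits list with the three adjustments applied."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    adjusted = list(logits)
    # Repetition penalty: make already generated tokens less likely.
    for token_id in set(generated_ids):
        if adjusted[token_id] > 0:
            adjusted[token_id] /= repetition_penalty
        else:
            adjusted[token_id] *= repetition_penalty
    # Logit bias: add a fixed offset to chosen token ids.
    for token_id, bias in (logit_bias or {}).items():
        adjusted[token_id] += bias
    # Temperature: values below 1 sharpen the distribution, above 1 flatten it.
    return [value / temperature for value in adjusted]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def greedy_decode(logits: List[float]) -> int:
    """Deterministic decoding: pick the highest-scoring token id."""
    return max(range(len(logits)), key=logits.__getitem__)


def sample_top_k(logits: List[float], k: int, rng: random.Random) -> int:
    """Stochastic decoding: sample among the k highest-scoring token ids."""
    top_ids = sorted(range(len(logits)), key=logits.__getitem__, reverse=True)[:k]
    probs = softmax([logits[i] for i in top_ids])
    return rng.choices(top_ids, weights=probs, k=1)[0]


raw_logits = [2.0, 1.0, 0.5, -1.0]
penalized = adjust_logits(raw_logits, generated_ids=[0], repetition_penalty=4.0)
next_token = greedy_decode(penalized)
```

Here token 0 has already been generated, so the penalty lowers its score and greedy decoding moves on to token 1. Passing a seeded `random.Random` to `sample_top_k` keeps stochastic runs reproducible.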

Agentic AI framework

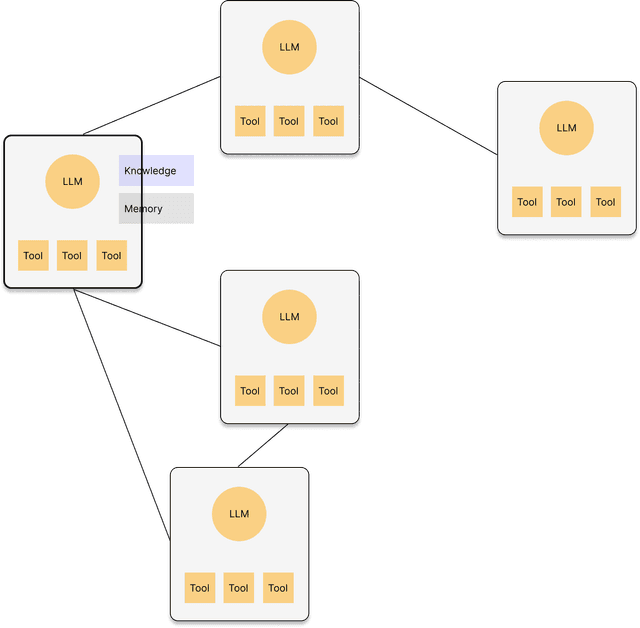

versionhq is a Python framework for autonomous agent networks that handle complex task automation without human interaction.

Key Features

Agent networks automate complex, multi-step tasks. Users can either configure their agents and network manually or let the system manage the process automatically based on the provided task goals.

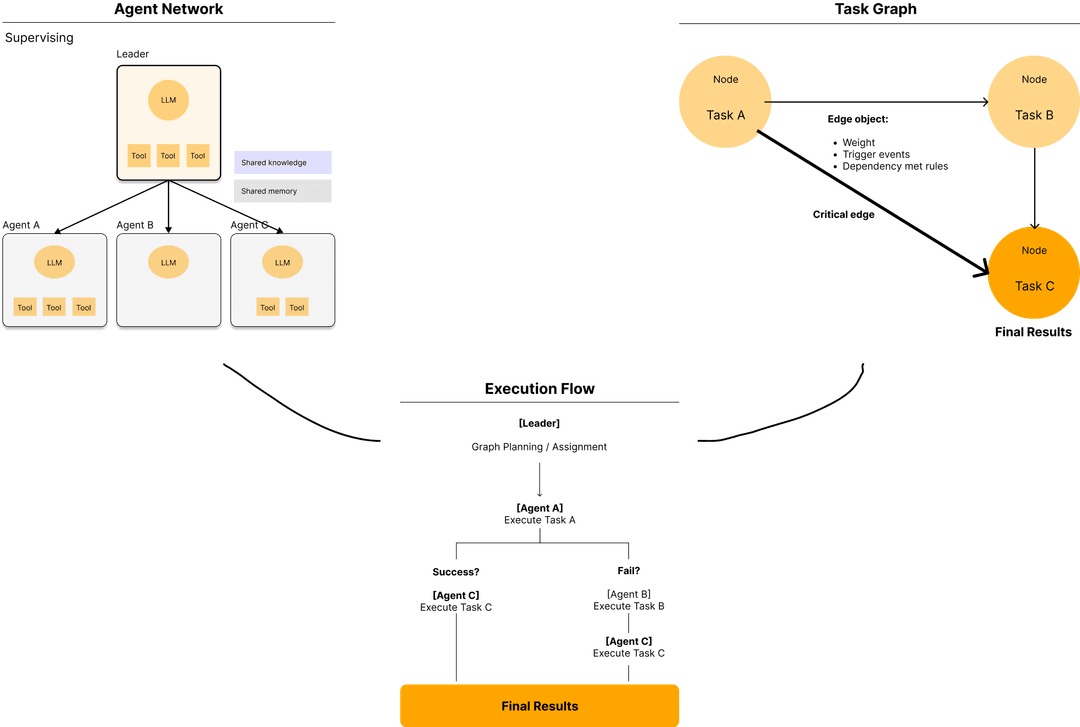

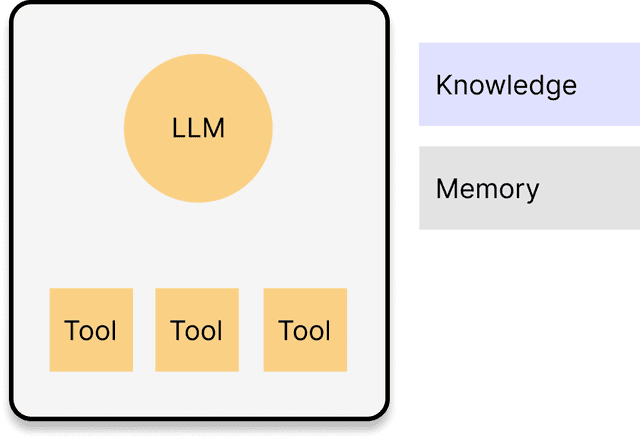

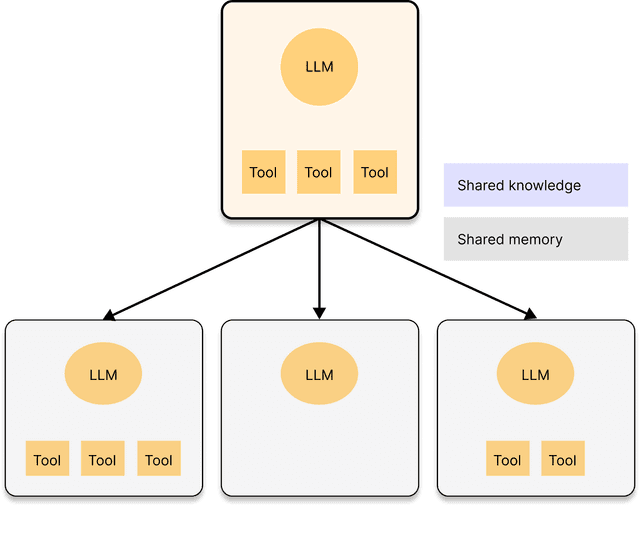

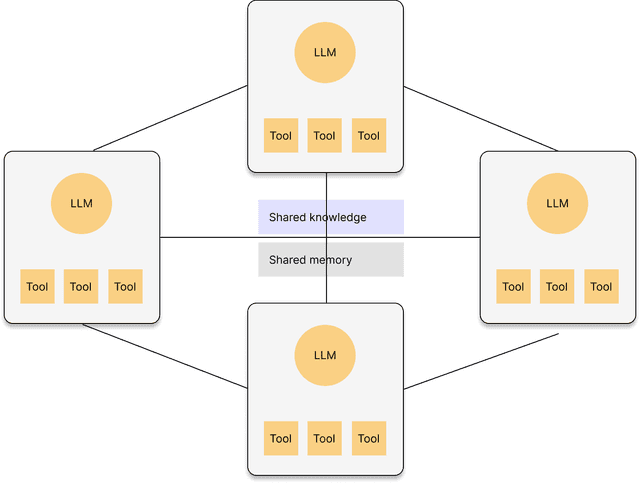

Agent Network

Agents adapt their formation based on task complexity.

You can specify a desired formation or allow the agents to determine it autonomously (default).

| | Solo Agent | Supervising | Squad | Random |
|---|---|---|---|---|
| Use case | An email agent drafts a promo message for the given audience. | The leader agent strategizes an outbound campaign plan and assigns components such as media mix or message creation to subordinate agents. | An email agent and a social media agent share product knowledge and deploy a multi-channel outbound campaign. | 1. An email agent drafts a promo message for the given audience, asking for insights on tone from other email agents that oversee other clusters. 2. An agent calls an external agent to deploy the campaign. |

Looking for Solutions?

- Deploying ML Systems 👉 Book a briefing session

- Hiring an ML Engineer 👉 Drop an email

- Learn by Doing 👉 Enroll in the AI Engineering Masterclass

Related Books

These books cover a wide range of ML theory and practice, from fundamentals to PhD level.

Linear Algebra Done Right

Foundations of Machine Learning, second edition (Adaptive Computation and Machine Learning series)

Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications

Machine Learning Design Patterns: Solutions to Common Challenges in Data Preparation, Model Building, and MLOps